How do you navigate the technologies that enable 3D depth sensing and machine vision? Of the many 3D depth-sensing technologies on the market, this article explains the differences between the three most prevalent today: stereo, structured light and time-of-flight.

What is stereo?

Stereo technology calculates distance using two cameras, with each one seeing the scene from a slightly different angle. The resulting parallax enables triangulation calculations to determine the distance.

The baseline, i.e. the distance between the two imagers, determines the depth range the system can measure. If the baseline is too long, nearby objects cannot be measured, because they fall outside the region both cameras see or their parallax is too large to match. If it is too short, the two views differ too little, the parallax becomes very small, and the 3D data becomes inaccurate.
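The baseline trade-off can be made concrete with the standard pinhole stereo relation Z = f · B / d (depth Z, focal length f in pixels, baseline B, disparity d in pixels). The following is a minimal sketch, not a description of any particular product: the focal length, the maximum searchable disparity and the two baselines are assumed values chosen only for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo triangulation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def min_measurable_depth(focal_px, baseline_m, max_disparity_px):
    """Closest depth whose disparity still fits into the matcher's search range."""
    return focal_px * baseline_m / max_disparity_px


def depth_step(focal_px, baseline_m, depth_m, disparity_step_px=1.0):
    """Approximate depth change per disparity step at depth_m.

    Follows from |dZ/dd| = Z^2 / (f * B): the error grows with the square
    of the distance and shrinks as the baseline gets longer.
    """
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    focal_px = 700.0          # assumed focal length in pixels
    max_disparity_px = 64.0   # assumed search range of the matcher
    for baseline_m in (0.02, 0.10):  # assumed short and long baseline
        nearest = min_measurable_depth(focal_px, baseline_m, max_disparity_px)
        step_far = depth_step(focal_px, baseline_m, depth_m=5.0)
        print(f"baseline {baseline_m:.2f} m: nearest depth {nearest:.2f} m, "
              f"depth step at 5 m per pixel of disparity {step_far:.2f} m")
```

Under these assumptions the longer baseline gives a finer depth step at 5 m but pushes the nearest measurable distance further out, which is the trade-off described above.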

In short, there is an ideal baseline for the distance you are trying to measure. If the application needs 3D data both close up and far away, stereo is not a good solution unless the cameras can pivot (like eyes), which would be expensive.

Stereo systems can be passive, i.e. they need no additional light source and simply take in ambient light, as our eyes do. And like our eyes, passive systems cannot see in the dark and cannot determine distance in a contrast-free environment. For example, a stereo system cannot determine the distance to an all-white wall: each imager sees the same featureless surface, so there is nothing to match between the two views and no parallax from which to derive 3D information.

To overcome these problems, a stereoscopic system can add a light source, usually in the IR range, to become an active stereoscopic system. This makes the system more expensive but enables it to see in the dark. These systems typically also emit a pattern, or texture, that makes the calculation possible in a low-contrast environment.
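The white-wall problem, and why a projected texture helps, can be illustrated with a toy block matcher on a single image row. This is only a sketch under simplifying assumptions: the matching cost (sum of absolute differences), the window size, the 5-pixel disparity and the random "IR texture" are all hypothetical, and real stereo pipelines are considerably more elaborate.

```python
import numpy as np


def sad_costs(left_row, right_row, x, half_window, max_disparity):
    """Sum-of-absolute-differences cost for each candidate disparity.

    A scene point at column x in the left view is assumed to appear at
    column x - d in the right view, where d is the disparity.
    """
    patch = left_row[x - half_window : x + half_window + 1]
    costs = []
    for d in range(max_disparity + 1):
        centre = x - d
        candidate = right_row[centre - half_window : centre + half_window + 1]
        costs.append(float(np.abs(patch - candidate).sum()))
    return np.array(costs)


def make_rows(texture, true_disparity):
    """Build a left/right row pair; the right view is the left view shifted."""
    left = np.asarray(texture, dtype=float)
    right = np.roll(left, -true_disparity)
    return left, right


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    wall = np.full(64, 200.0)               # uniform white wall
    dots = rng.uniform(0.0, 55.0, size=64)  # hypothetical projected IR texture

    scenes = (("passive, white wall", wall), ("active, wall + texture", wall + dots))
    for name, row in scenes:
        left, right = make_rows(row, true_disparity=5)
        costs = sad_costs(left, right, x=32, half_window=3, max_disparity=10)
        ties = int(np.sum(costs == costs.min()))
        print(f"{name}: best disparity {int(costs.argmin())}, "
              f"candidates tied for best: {ties}")
```

On the uniform wall every candidate disparity has the same cost, so the matcher has no basis for choosing one. With the added texture, the cost has a single minimum at the true shift.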

Stereo systems are the simplest to design, but their computational requirements are high: matching the two views typically calls for a custom ASIC or a very high-end processor, which adds significantly to the cost of the overall electronic system.

What is structured light (SL)?

This technology uses an infrared transmitter that projects a fixed pattern into the scene. An infrared-sensitive imager then observes how the pattern is distorted and interprets those changes to calculate distance. Like stereo, structured light requires a baseline, here the distance between the emitter and the receiver, to obtain a 3D depth map. So while this technology has fewer limitations than stereo, it still needs more space than a system without a baseline. Another limitation is its performance in outdoor light: since sunlight interferes with the projected pattern, this technology has problems in outdoor applications.

The IR pattern in a structured light system can be implemented in two broad ways:

- Spatial: a fixed pattern is projected. The beam of an IR laser emitter passes through a diffractive optical element (DOE) to create a single, unchanging pattern.

- Temporal: a changing pattern is projected, which can improve accuracy. However, since it needs a changing illuminator (a digital light processor or other projector), it costs more in materials.
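To give an idea of how a temporal pattern can identify where each ray came from, here is a minimal sketch using binary Gray-code stripes. The article does not say which coding scheme is used; Gray code is assumed here only because it is a common textbook choice, and the function names are hypothetical. Each projector column gets a unique bit sequence over several frames, and a camera pixel that observes this sequence can recover the column index, which then feeds the triangulation.

```python
def gray_encode(n):
    """Convert a binary index to its Gray code."""
    return n ^ (n >> 1)


def gray_decode(g):
    """Convert a Gray code back to the binary index."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def column_patterns(width, num_bits):
    """Return num_bits stripe patterns, most significant bit first.

    Pattern k holds, for every projector column, bit k of that column's
    Gray code (1 = illuminated, 0 = dark).
    """
    return [
        [(gray_encode(x) >> (num_bits - 1 - k)) & 1 for x in range(width)]
        for k in range(num_bits)
    ]


def decode_column(bits):
    """Recover the projector column from the bits one camera pixel observed."""
    g = 0
    for b in bits:
        g = (g << 1) | b
    return gray_decode(g)


if __name__ == "__main__":
    patterns = column_patterns(width=16, num_bits=4)
    for frame, pattern in enumerate(patterns):
        print(f"frame {frame}:", "".join(str(b) for b in pattern))
    observed = [pattern[11] for pattern in patterns]  # pixel lit by column 11
    print("decoded column:", decode_column(observed))
```

Because neighbouring columns differ in only one bit, a pixel that sits on a stripe boundary is off by at most one column, which is one reason Gray code is popular for this purpose.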

Like stereo, a true structured light solution uses triangulation to derive 3D data. In stereo, the two imagers and the object being detected form a triangle from which depth can be inferred. In structured light, the triangle is formed by the emitter, the detector and the object. Triangulation is computationally demanding, so like stereo, structured light needs an ASIC or a high-end processor.

What is time of flight (ToF)?

As the name suggests, this method measures the time it takes for light to leave the camera, bounce off objects and return to the camera. It measures distance directly rather than calculating it by triangulation. Since there is no triangulation, no baseline is needed as in stereo or structured light, so a ToF system can be much smaller than other 3D systems. The light source is modulated, i.e. it carries a distinct signal that can be differentiated from sunlight.

There are two main ToF methods:

- Direct ToF: This method directly measures the travel time of the light. Because light is so fast, the timing must be extremely precise, which makes direct ToF difficult and expensive. The most common direct ToF technology, known as LIDAR (Light Detection and Ranging), is used in most exterior autonomous driving development projects because it performs well at long distances. A solid-state variant based on SPADs (single-photon avalanche diodes) is used for simple range finders, and experiments are ongoing to take SPAD to higher-level functions.

- Indirect ToF/Phase ToF: The emitted light is modulated periodically at a known frequency, for example as a sine wave. Instead of measuring the travel time directly, Phase ToF measures the phase shift of this modulation between the moment the light leaves the camera and the moment it returns. This method allows for smaller, more integrated and less expensive systems. Infineon/pmd develop, produce and provide sensors and camera modules in this field.
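Both methods reduce to short formulas, sketched below. Direct ToF uses d = c · t / 2, and phase ToF uses d = c · φ / (4π · f) with an unambiguous range of c / (2f). The four-sample phase estimate is one common textbook scheme and is an assumption here, as are the sign convention, the 60 MHz modulation frequency and the simulated signal model; none of this is taken from pmd's implementation.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def direct_tof_distance(round_trip_s):
    """Distance from a directly measured round-trip time."""
    return C * round_trip_s / 2.0


def phase_tof_distance(phase_rad, mod_freq_hz):
    """Distance from the phase shift of the modulation signal."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Distance at which the phase wraps around (phase = 2*pi)."""
    return C / (2.0 * mod_freq_hz)


def simulate_samples(distance_m, mod_freq_hz, amplitude, ambient):
    """Idealised correlation samples at 0, 90, 180 and 270 degrees (assumed model)."""
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / C
    offsets = (0.0, math.pi / 2.0, math.pi, 3.0 * math.pi / 2.0)
    return tuple(ambient + amplitude * math.cos(phase - o) for o in offsets)


def phase_from_samples(a0, a90, a180, a270):
    """Four-phase estimate; constant ambient light cancels in the differences."""
    return math.atan2(a90 - a270, a0 - a180) % (2.0 * math.pi)


if __name__ == "__main__":
    mod_freq_hz = 60e6  # assumed modulation frequency
    print(f"direct ToF, 10 ns round trip: {direct_tof_distance(10e-9):.3f} m")
    print(f"unambiguous range: {unambiguous_range(mod_freq_hz):.3f} m")
    samples = simulate_samples(1.5, mod_freq_hz, amplitude=100.0, ambient=5000.0)
    phase = phase_from_samples(*samples)
    print(f"phase ToF estimate: {phase_tof_distance(phase, mod_freq_hz):.3f} m")
```

The direct formula also shows why direct ToF timing is demanding: a 1 mm change in distance changes the round trip by only about 6.7 picoseconds. In the phase scheme, the large constant `ambient` term drops out of both differences, which is the sense in which modulated light can be separated from sunlight.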

Comparing the three technologies

While other 3D technologies rely on complex algorithms to calculate an object's distance from the camera lens, the ToF image sensor chip delivers more accurate measurements by capturing the infrared light reflected off the subject. As a result, ToF copes better with ambient light and reduces the workload on the application processor, which lowers power consumption. Thanks to its fast response, ToF technology is widely used in biometric authentication methods such as face recognition. A further benefit over stereo and SL is that ToF is largely unaffected by light from external sources. It delivers an excellent recognition rate both indoors and outdoors, and is thus well suited to AR and VR applications.

Why (pmd) ToF?

pmd ToF is superior to SL and stereo in sunlight because it has a high dynamic range with integrated suppression of background illumination (SBI). For each pixel, the SBI circuitry subtracts the electrons generated by sunlight, so that the full dynamic range of the sensor remains available for the active light.

- ToF technology at the system level: Because no baseline is required, the system is small. It is mechanically robust, and as there are no moving parts, mechanical shock does not influence the data. It also gives you rich data (depth data + confidence + IR intensity as a gray value) that is perfectly synced in time and space, as it is all calculated from the same raw data set.

- ToF technology at the chip level: It is a single-chip solution with a highly integrated imager, offering the benefits of low cost, small size and flexibility.

- ToF technology in the software: It offers flexible operation modes and superior detection of artifacts (multipath detection, stray-light detection, detection of pixel saturation and detection of flying pixels), within a software framework proven in many successfully commercialized products.

- ToF technology in production: Calibration is fast (<10 sec) and is a one-time procedure that lasts for the lifetime of the device. Its repeatability has been proven in multiple mass-produced commercial products.

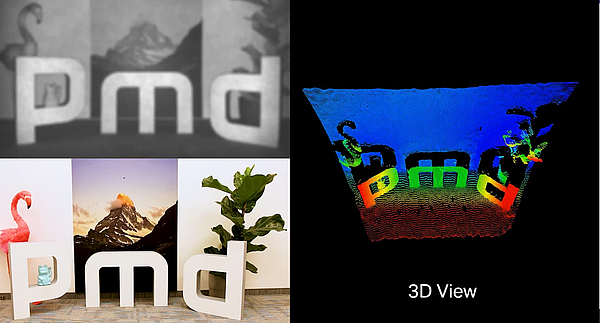

Our cameras are a great way to familiarize yourself and work with ToF technology. They come with pmd's powerful software suite Royale, which contains all the logic to operate the 3D camera, and with the visualization tool Royale Viewer. Royale delivers a 3D point cloud with one point for every pixel of the observed scene, plus an IR gray value and a confidence value for each.
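As a rough illustration of how such per-pixel data might be used downstream, the sketch below keeps only the points whose confidence passes a threshold. It deliberately does not use the Royale API: the array layout, the confidence scale of 0 to 255 and the function name are assumptions made for this example, and the data is made up.

```python
import numpy as np


def keep_confident_points(points_xyz, gray, confidence, min_confidence):
    """Return the points and gray values whose confidence passes the threshold.

    points_xyz: (N, 3) array of x, y, z coordinates (assumed layout)
    gray:       (N,) IR intensity per point
    confidence: (N,) confidence per point (assumed scale 0..255)
    """
    points_xyz = np.asarray(points_xyz, dtype=float)
    gray = np.asarray(gray)
    confidence = np.asarray(confidence)
    # Also drop points with non-finite coordinates.
    mask = (confidence >= min_confidence) & np.isfinite(points_xyz).all(axis=1)
    return points_xyz[mask], gray[mask]


if __name__ == "__main__":
    # Made-up frame with three pixels, for illustration only.
    points = [[0.0, 0.0, 1.0], [0.0, 0.0, float("nan")], [0.1, 0.0, 2.0]]
    gray = [10, 20, 30]
    confidence = [255, 255, 0]
    kept_points, kept_gray = keep_confident_points(points, gray, confidence, 128)
    print(kept_points, kept_gray)
```

Because depth, gray value and confidence come from the same raw data, a single mask can be applied to all of them without any alignment step.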

Setting up the camera with a PC doesn't take long either. All you have to do is download and install the software, connect the camera to your PC via a USB-C cable, and you're ready to go. For further development, the installation folder also contains samples and code that you can use to create your own software for your specific use case.

If you have any questions or would like to learn more, please contact us. You can find our iToF camera development kits here: 3d.pmdtec.com/en/